Cognitive Bias In The Business Of Advertising: Representativeness

This is Part Three of our Cognitive Bias in Advertising series. Read Parts One, Two, and Four.

Excellent meme source: BuzzFeed

Representativeness is a huge category and comprises several sub-types of bias that fall within a similar structure. It is also the primary culprit in many of the mistakes in judgment to which humans are prone.

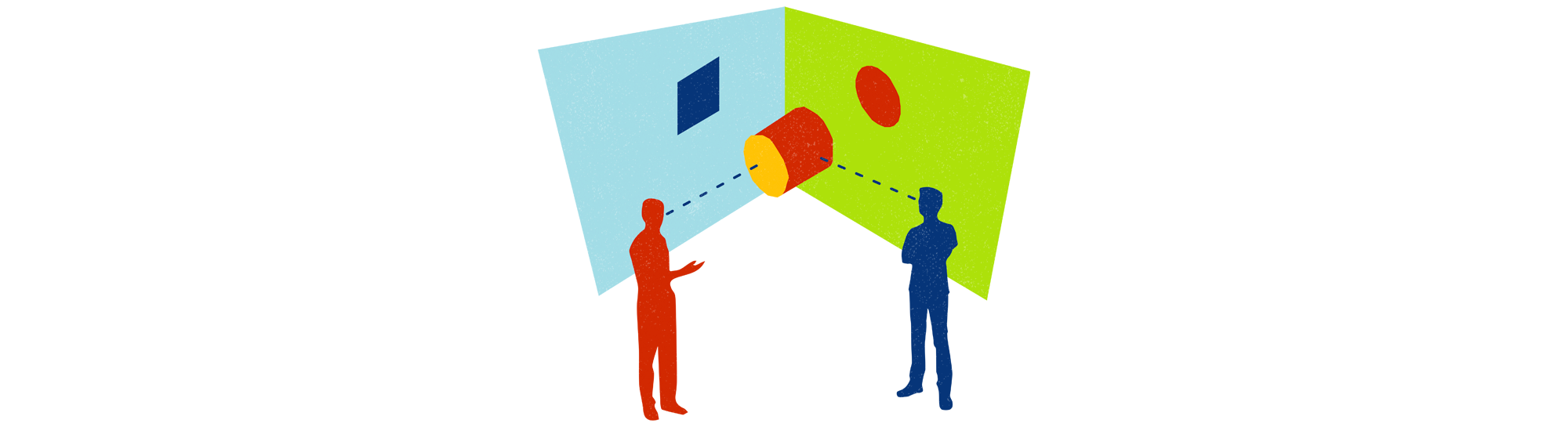

Generally, representativeness is an example of magical thinking, or of using a prototypical example to stand in for a population. "I see a thing, and I imagine every other thing in this category is exactly the same." This happens because heuristic shortcuts in human judgment break (or, more properly, wholly disregard) the laws of probability. What follows is an examination of different types of representativeness.

Ignorance of Base Rate

Because people generally cannot imagine the base rate of occurrence of any given event correctly, and further because the personal observation of any given event destroys a subject's ability to consider that event objectively, people commonly overestimate the base occurrence rate of rare events or qualities, and underestimate the frequency of common events or qualities. This sounds counterintuitive – I saw a thing and so it must be both special and common – but this is the kind of thinking that people cannot help but engage in, and holding those two ideas simultaneously is what skews people's judgment.

One aspect of this specific kind of error is using similarity to a prototype to overestimate frequency. So, for example, if you see two ants in two days and both are red, you assume all ants are red.

In a vacuum, the ant example seems a little silly, but it makes sense in the usual context of ignoring base rates, in which you disregard base rates and other evidence in order to fit a preferred narrative. This facet of representativeness, combined with availability, is how we ended up with the Fake News phenomenon. You see what you are prepared to see, and early errors in judgment spread from a single initial observation to color all future observations and considerations. If I encounter repeated instances of an event that fit a pre-formed narrative, the narrative-specific interpretation of that event becomes more and more available to me over time; I want to see it, so I do.

Conjunction Fallacy

This is how people arrive at false correlation / causation relationships — they assume that multiple specific qualities are more likely than a single general quality, even though this can never logically or rationally be true.

The most famous example of this is the “Linda Problem” posed by Daniel Kahneman and Amos Tversky, whose work is widely credited with founding the field of behavioral economics:

“Linda is 31 years old, single, outspoken, and very bright. She majored in philosophy. As a student, she was deeply concerned with issues of discrimination and social justice, and also participated in anti-nuclear demonstrations.”

Which is more probable?

1. Linda is a bank teller.

2. Linda is a bank teller and is active in the feminist movement.

Whenever this problem is posed in a formal setting, the majority of respondents choose option 2. However, the probability of two events occurring together (in "conjunction") is always less than or equal to the probability of either one occurring alone.
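
To make the arithmetic concrete, here is a minimal Python sketch. Everything in it is invented for illustration: the population is simulated, the 5% bank teller rate and 90% feminist rate are made-up numbers rather than anything from Tversky and Kahneman, and the helper name `conjunction_probabilities` is ours. The point is only that the people who are both things are a subset of the people who are one of them.

```python
import random


def conjunction_probabilities(people):
    """Return (P(teller), P(teller and feminist)) for (is_teller, is_feminist) pairs."""
    total = len(people)
    tellers = sum(1 for is_teller, _ in people if is_teller)
    both = sum(1 for is_teller, is_feminist in people if is_teller and is_feminist)
    return tellers / total, both / total


# Hypothetical population: the rates below are assumptions, not real data.
rng = random.Random(0)
people = [(rng.random() < 0.05, rng.random() < 0.90) for _ in range(10_000)]

p_teller, p_both = conjunction_probabilities(people)
print(f"P(bank teller)              = {p_teller:.3f}")
print(f"P(bank teller AND feminist) = {p_both:.3f}")
```

Crank the feminist rate as high as you like. Feminist bank tellers are still a subset of bank tellers, so the second number can match the first but never beat it.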

Tversky and Kahneman argue that most people get this problem wrong because they use representativeness in making the judgment or in assessing the informal statistical probability. Because of how she is initially described – you could think of this as how her characteristics are framed – Option 2 seems more "representative" of Linda, even though it is plainly less likely in terms of statistical probability.

Without belaboring the point, you can see A) how common this error is, and B) how destructive it can be in making good decisions or in attempting to make objective judgments.

Ignorance of Sample Size

This one is fairly simple and extremely destructive: Data is less useful and less predictive when it is drawn from smaller sample sizes. Shorter: Anecdotes are not the same as data.

For example: if our subject has only ever had one experience with a fan of Manchester United FC, but in that one experience the Manchester United fan kicked a dog, then our subject may have a dim view of Manchester United fans in general. Because that story of a Manchester United fan is easily available to our subject, that Man U fan is now representative of all Man U fans, and our subject is ignoring the weight his (very small) sample size should have in judging the moral quality of all Man U fans.

Notice also the conjunction fallacy and other error heuristics at work in concert with representativeness: our subject may believe it is more likely that all Man U fans kick dogs than that this one fan was an aberration. This is how we end up at arguments like “violent extremists within a given religion are not outliers but are instead how everyone in that religion must be.” The same ingredients are all present: multiple specific qualities, disregard of sample size, overestimation of rare qualities, etc.
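
To put rough numbers on the sample-size point, here is a small simulation sketch. It assumes, purely for illustration, that 1% of fans behave badly. That figure, the function name `estimated_rates`, and the sample sizes are all invented. Each trial draws a sample of fans and estimates the bad-behavior rate from that sample alone.

```python
import random


def estimated_rates(true_rate, sample_size, trials, seed=0):
    """Estimate a trait's frequency from many independent samples of one size."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        # Count how many sampled fans show the trait, then convert to a rate.
        hits = sum(rng.random() < true_rate for _ in range(sample_size))
        estimates.append(hits / sample_size)
    return estimates


TRUE_RATE = 0.01  # assumption: 1% of fans behave badly

for n in (1, 10, 1000):
    estimates = estimated_rates(TRUE_RATE, n, trials=500)
    print(f"sample size {n:>4}: estimates range from "
          f"{min(estimates):.3f} to {max(estimates):.3f}")
```

With a sample of one, the only possible conclusions are "no fans do this" and "every fan does this." Neither is anywhere near 1%, which is the whole problem with judging a fan base from a single anecdote.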

Dilution Effect

People tend to undervalue and underutilize diagnostic information when non-diagnostic information is also present. This is because, in the judgment process, additional irrelevant information weakens the effect of statistical information, or too much info ruins pointed data that may actually indicate the truth of a thing.

This is why, for example, SEO / SEM shops must be thoughtful about the kind of information presented in a website analytics report. A stray, narrow, interesting tidbit of information (people who come to the website on the weekends read all the way through the About Us page) may weaken a very important aspect of the statistical performance assessment (people who come from Facebook are more likely to convert and buy something) and cause the recipient of the information to reach an illogical conclusion (we should put a free quote form on the About Us page) while drawing attention away from the important fact of Facebook working as a conversion engine.

I mean, it may not end up being a bad idea to have a Free Quote form on the About Us page, but the takeaway about Facebook should likely be the focus in this instance, because the decision resulting from a report like this almost always concerns the allocation of resources and how they map to goals.

Also, you may be falsely correlating engagement behavior with conversion behavior, and adding a sales pitch to a well-written corporate history may actually decrease conversion and drive people away. The point is, what the agency needed to communicate was that Facebook visitors convert and buy things, and you can make that true fact less compelling by adding in random, unrelated observations.

This also works in reverse; additional but irrelevant information also weakens the effect of a stereotype or a preconceived notion, in some cases defeating bias. Again, from Kahneman and Tversky:

Subjects in one study were asked whether "Paul" or "Susan" was more likely to be assertive, given no other information than their first names. They rated Paul as more assertive, apparently basing their judgment on a gender stereotype. Another group, told that Paul's and Susan's mothers each commute to work in a bank, did not show this stereotype effect; they rated Paul and Susan as equally assertive. The explanation is that the additional information about Paul and Susan made them less representative of men or women in general, and so the subjects' expectations about men and women had a weaker effect. This means that unrelated, non-diagnostic information about an issue can make relevant information less influential in people's judgment of that issue.

Misperception of Randomness

People incorrectly expect outcomes to even out probabilistically in the short term, and they underestimate how large a sample has to be before observed results settle toward their true probabilities.

The easiest example of this deals with coin flips. If you tell someone that the outcomes of ten coin flips in a row are the following:

H H H H H H H H H H

...and then ask them about the probability of the result of an eleventh coin flip, most people will say that it is more likely to come up tails, even though the probability is still a 50 / 50 shot. Respondents tend to think the result is “due” to be tails. Another name for this is the Gambler’s Fallacy. A third way to say this is "this is not how any of this works."
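
If you would rather check this than take our word for it, here is a minimal simulation sketch. It flips a fair virtual coin many times and looks only at the flips that come right after a streak of heads. The function name `heads_rate_after_streak` and the numbers are our own choices, and it uses a streak of five rather than ten simply so that plenty of streaks turn up in a quick run.

```python
import random


def heads_rate_after_streak(num_flips, streak_len, seed=0):
    """Return (share of heads right after a heads streak, number of such flips)."""
    rng = random.Random(seed)
    run = 0               # consecutive heads seen just before the current flip
    followups = 0         # flips that came right after a long-enough streak
    heads_followups = 0   # how many of those flips were heads
    for _ in range(num_flips):
        is_heads = rng.random() < 0.5
        if run >= streak_len:
            followups += 1
            heads_followups += is_heads
        run = run + 1 if is_heads else 0
    if followups == 0:
        raise ValueError("no streaks found; try more flips or a shorter streak")
    return heads_followups / followups, followups


rate, count = heads_rate_after_streak(num_flips=200_000, streak_len=5)
print(f"{count} flips followed a streak of 5 heads; {rate:.1%} of them were heads")
```

The share of heads after a streak should hover around 50%, because the coin has no memory of what it did last time.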

Up next: we start putting it all together.